In the ever-evolving landscape of software development, the need for a well-defined Test Strategy in Software Testing is paramount. This need stems from the growing complexity of software systems and the critical role they play in the functioning of modern businesses and everyday life. A Test Strategy serves as a roadmap, providing clear guidelines and a structured approach to the testing process. It ensures that all testing activities are aligned with the project’s objectives and business goals.

Without a Test Strategy, testing efforts can become disjointed and inefficient, leading to gaps in coverage, redundant testing efforts, and ultimately, a higher risk of software defects slipping through into production. The strategy helps in optimizing resource allocation and time management, ensuring that testing is both effective and economical.

What is a Test Strategy?

A Test Strategy is a high-level document that defines the overall approach, objectives, and scope of testing for a project or organization. It is crucial in managing the risks associated with software development: it identifies potential risks in the testing process and outlines strategies for managing them, thereby minimizing the likelihood of project delays and cost overruns.

The document also sets the stage for a consistent testing methodology across the organization, fostering best practices and standardization. This consistency is vital in maintaining the quality of software over time, especially in large organizations where multiple teams work on different aspects of the same project. In essence, a Test Strategy is not just a tool for planning but a critical component in ensuring the delivery of high-quality, reliable software in a timely and cost-effective manner.

Test Strategy vs. Test Plan: Key Differences

Understanding how a Test Strategy compares to a Test Plan helps clarify their distinct contributions to the software testing process. Though both are essential for successful QA, they serve different roles and audiences. Here’s how they differ across several important dimensions:

Purpose and Focus

A Test Strategy offers a broad, high-level perspective. It defines the overarching approach, main objectives, and the general scope of testing across the entire project. Think of it as the guiding framework that shapes the organization’s philosophy toward quality assurance: what types of testing will be carried out, at which levels, and using which methods.

In contrast, a Test Plan zooms in on the specifics. It spells out the detailed test objectives, step-by-step procedures, which test cases to run, the test data to use, and the expected results. Where a Test Strategy charts the entire course, the Test Plan plots each step of the journey.

Audience and Scope

The Test Strategy is largely crafted for stakeholders, project managers, and senior QA leaders—essentially, those shaping or overseeing quality at a high level. It sets standard practices for a whole project or even the organization.

On the other hand, the primary audience for a Test Plan includes hands-on testers, test leads, and anyone participating directly in the testing effort. Its scope is narrower, often addressing a particular feature, phase, or component rather than the whole system.

Level of Detail and Flexibility

Because it serves as a top-level roadmap, the Test Strategy tends to remain more abstract and less detailed. This abstraction gives room for tailoring as projects develop and as new challenges arise.

In contrast, a Test Plan is far more concrete. It lays out step-by-step instructions, fully defined test cases, scripts, and thorough procedures. While this makes execution simpler for testers, it also means that the plan is less flexible and cannot pivot easily if requirements change late in the development process.

Longevity

A Test Strategy typically remains consistent throughout the lifecycle of a project, maintaining its high-level vision even as some details evolve below the surface. Meanwhile, a Test Plan is a living document: it evolves with ongoing feedback, adapting to discoveries made during test execution and shifting project needs.

By recognizing these distinctions, teams can develop a cohesive quality process that balances strategic direction with tactical execution—ensuring no detail slips through the cracks and the broader organizational priorities remain in view.

Common Risks in Software Testing

When crafting a Test Strategy, it’s essential to identify and anticipate the typical risks that may arise during the software testing process. Here are some common concerns that frequently impact software quality:

- Payment Gateway Instability: Transaction failures can occur when the payment gateway struggles under heavy traffic, leading to customer frustration and lost revenue.

- Cross-Browser Compatibility Issues: Different web browsers (especially older versions of Chrome, Firefox, Safari, and Edge) may render your application inconsistently, resulting in a fragmented user experience.

- High User Load: As the number of concurrent users grows beyond what the system was designed for (for example, more than 5,000 simultaneous users), performance may degrade noticeably, causing slow response times or even outages.

- Security Vulnerabilities: New features, such as revamped user authentication processes, may introduce unforeseen security gaps, putting sensitive user data at risk.

Recognizing these risks early allows your team to proactively design mitigation strategies, ensuring a smoother and more reliable path to software delivery.

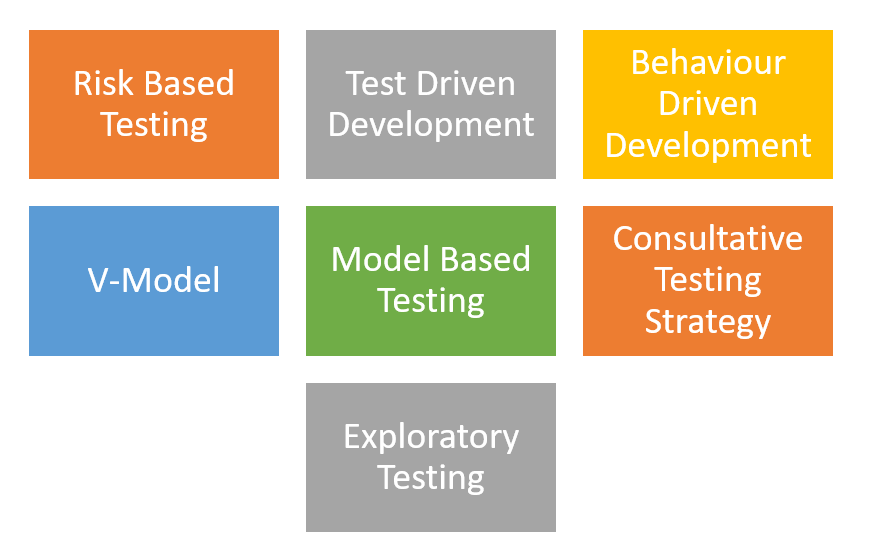

Types of Test Strategy in Software Testing

In the intricate world of software development, the adoption of a suitable testing strategy is pivotal for ensuring the quality and reliability of the final product. Each testing strategy offers a unique approach and caters to different aspects of the software development lifecycle. Let’s delve into some of the prominent types of Test Strategy in Software Testing that have significantly shaped modern software testing practices.

The Importance of Combining Preventive and Reactive Testing

While preventive testing helps uncover issues before they take root, real-world software projects are full of surprises—think of it as debugging in the wild. That’s where reactive testing steps in, catching defects that slip past even the best-laid plans. Together, these strategies help teams identify both anticipated and unexpected issues, cover gaps in test design, and adjust to shifting project realities.

By leveraging both approaches, teams reduce the risk of severe defects derailing a release. This dual strategy is particularly vital for complex applications, as it gives you the flexibility to adapt and respond when unforeseen challenges arise while maintaining a proactive stance against future problems.

Preventive vs. Reactive Test Strategies: Understanding the Difference

When considering managing quality in software development, teams often debate between preventive and reactive testing strategies.

A preventive test strategy focuses on foreseeing and addressing potential issues before they materialize. This often involves careful planning, risk assessment, and designing test cases early—ideally before the code is even written. The goal here is to nip problems in the bud, which can minimize costly defects further down the line.

In contrast, a reactive test strategy comes into play after the software is built or during its integration. This approach accepts that some issues won’t become visible until the system runs in a real or test environment. Reactive testing is about identifying and swiftly resolving unexpected defects that slip through earlier phases, adapting to what’s discovered during or after deployment.

In practice, most robust QA processes blend both approaches. Preventive strategies provide a safety net up front, while reactive strategies ensure teams can quickly handle surprises—because no matter how thorough the planning, real-world use always brings a few curveballs. This combination is crucial for maintaining the consistency and resilience of software quality efforts.

1. Risk-Based Testing Strategy

Risk-based testing prioritizes testing activities according to a risk assessment of the software's components, typically rating each component by the likelihood and the impact of a failure. Components deemed high risk, due to factors like complexity, business importance, or past defects, are tested more rigorously and earlier in the testing cycle. This strategy optimizes resources and time by ensuring that the most critical parts of the application are robust and reliable.
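To make the idea concrete, here is a minimal sketch of risk-based prioritization in Python. It assumes a simple 1-to-5 scale for likelihood and impact and the common heuristic of multiplying them into a single risk score; the `RiskItem` class, the `prioritize` function, and the ratings are hypothetical, not taken from any specific tool or standard.

```python
from dataclasses import dataclass


@dataclass
class RiskItem:
    component: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Common heuristic: risk exposure = likelihood x impact
        return self.likelihood * self.impact


def prioritize(items: list[RiskItem]) -> list[RiskItem]:
    """Return items highest-risk first; equal scores keep their input order."""
    return sorted(items, key=lambda item: item.score, reverse=True)


if __name__ == "__main__":
    # Hypothetical ratings for the kinds of risks listed earlier in this article
    backlog = [
        RiskItem("cross-browser rendering", likelihood=4, impact=2),
        RiskItem("payment gateway under load", likelihood=3, impact=5),
        RiskItem("new authentication flow", likelihood=3, impact=4),
        RiskItem("profile page styling", likelihood=2, impact=1),
    ]
    for item in prioritize(backlog):
        print(f"{item.score:>2}  {item.component}")
```

Run as a script, it lists the payment gateway (score 15) first and the profile page styling (score 2) last. Real risk models often add factors such as defect history or change frequency, but the ordering logic stays the same.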

2. Consultative Test Strategy

A consultative test strategy takes a collaborative approach in which stakeholders, including business analysts, developers, and users, are actively involved in the testing process. It leverages the diverse perspectives and expertise of all stakeholders to identify key areas of focus, potential risks, and the most appropriate testing methodologies. It fosters a shared understanding of the project goals and ensures that testing aligns with business requirements and user expectations.

3. Exploratory Testing

This is an approach where testers dynamically explore and test the software without predefined test cases or scripts. This strategy relies heavily on the tester’s experience, intuition, and creativity. It is particularly effective in uncovering issues that may not be easily identified through structured testing approaches. Exploratory testing is often used in conjunction with other testing strategies to enhance test coverage and discover unexpected or hidden issues.

4. V-Model Testing Strategy

This is a development methodology that emphasizes the parallel relationship between development stages and their corresponding testing phases. Each development phase, such as requirements specification or design, has a corresponding testing phase, like acceptance testing or system testing. This model ensures that testing is integrated throughout the development process, facilitating early detection and resolution of defects.

5. Behavior-Driven Development (BDD) Testing

This Test Strategy in Software Testing focuses on the behavior of the software from the user’s perspective. In BDD, testing begins with the definition of expected behavior and user stories. Tests are then designed to validate that the software behaves as expected in various scenarios. This approach bridges the gap between technical and non-technical stakeholders, ensuring that the software meets the intended user requirements.
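A BDD scenario can be sketched without any framework by mapping each Given/When/Then step onto a plain `unittest` test. The `ShoppingCart` class and the scenario are hypothetical; dedicated tools such as behave or pytest-bdd instead bind steps written in Gherkin feature files to Python functions, which this sketch does not attempt to reproduce.

```python
import unittest


class ShoppingCart:
    """Hypothetical domain object used only for this illustration."""

    def __init__(self) -> None:
        self.items: dict[str, int] = {}

    def add(self, sku: str, quantity: int = 1) -> None:
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        self.items[sku] = self.items.get(sku, 0) + quantity

    def total_quantity(self) -> int:
        return sum(self.items.values())


class AddToCartBehaviour(unittest.TestCase):
    """
    Feature: Shopping cart
      Scenario: Adding the same product twice
        Given an empty cart
        When the customer adds "SKU-1" twice
        Then the cart contains 2 units of "SKU-1"
    """

    def test_adding_same_product_twice_accumulates_quantity(self) -> None:
        # Given an empty cart
        cart = ShoppingCart()
        # When the customer adds "SKU-1" twice
        cart.add("SKU-1")
        cart.add("SKU-1")
        # Then the cart contains 2 units of "SKU-1"
        self.assertEqual(cart.items["SKU-1"], 2)
        self.assertEqual(cart.total_quantity(), 2)

    def test_non_positive_quantity_is_rejected(self) -> None:
        # Given an empty cart
        cart = ShoppingCart()
        # When the customer tries to add zero units, Then the request is rejected
        with self.assertRaises(ValueError):
            cart.add("SKU-1", 0)
        self.assertEqual(cart.total_quantity(), 0)
```

Save it as a module and run it with `python -m unittest`. The class docstring doubles as a human-readable specification that non-technical stakeholders can review.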

6. Test-Driven Development (TDD)

This is an approach where test cases are written before the actual code is developed. Developers first write a failing test case that defines a desired improvement or new function, then produce the minimum amount of code to pass the test, and finally refactor the new code to acceptable standards. TDD promotes simple designs and inspires confidence in the software’s functionality.
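The red-green-refactor cycle can be illustrated with a small, hypothetical `is_leap_year` function. The tests come first in the listing because in TDD they are written first. In practice each test would be added one at a time and the implementation grown just enough to make it pass, so the code below shows the end state of several cycles rather than a single step.

```python
import unittest


# Step 1 (red): these tests are written first and fail until is_leap_year exists.
class LeapYearTest(unittest.TestCase):
    def test_year_divisible_by_4_is_leap(self) -> None:
        self.assertTrue(is_leap_year(2024))

    def test_century_is_not_leap(self) -> None:
        self.assertFalse(is_leap_year(1900))

    def test_century_divisible_by_400_is_leap(self) -> None:
        self.assertTrue(is_leap_year(2000))

    def test_ordinary_year_is_not_leap(self) -> None:
        self.assertFalse(is_leap_year(2023))


# Steps 2 and 3 (green, then refactor): the simplest implementation that
# satisfies every test above, tidied into a single boolean expression.
def is_leap_year(year: int) -> bool:
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)
```

Because the tests already exist, the refactoring step can change the implementation freely: as long as the suite stays green, the behavior is preserved.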

7. Model-Based Testing Strategy

Model-based testing involves creating models that represent the desired behavior of the system under test. These models are then used to generate test cases automatically. This approach is particularly useful for complex systems, as it helps visualize the requirements and develop comprehensive test scenarios.
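As a minimal sketch of model-based test generation, the example below describes a hypothetical login feature as a finite-state machine and derives one test sequence per transition (a criterion usually called transition coverage). The states, actions, and the `transition_cover` function are illustrative assumptions; dedicated model-based testing tools use richer modeling notations and coverage criteria.

```python
from collections import deque

# Hypothetical model of a login feature: state -> {action: next_state}
MODEL: dict[str, dict[str, str]] = {
    "logged_out": {
        "enter_valid_credentials": "logged_in",
        "enter_invalid_credentials": "error_shown",
    },
    "error_shown": {"retry": "logged_out"},
    "logged_in": {"log_out": "logged_out"},
}


def transition_cover(model: dict[str, dict[str, str]], start: str) -> list[tuple[list[str], str]]:
    """Generate one test per transition: the shortest action path from `start`
    to the transition's source state, followed by the transition itself."""
    # Breadth-first search finds the shortest action sequence to each state.
    paths: dict[str, list[str]] = {start: []}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for action, target in model.get(state, {}).items():
            if target not in paths:
                paths[target] = paths[state] + [action]
                queue.append(target)

    tests = []
    for state, transitions in model.items():
        if state not in paths:
            continue  # unreachable from `start`, so no test can exercise it
        for action, target in transitions.items():
            # Each test: (actions to perform, state the model expects afterwards)
            tests.append((paths[state] + [action], target))
    return tests


if __name__ == "__main__":
    for actions, expected in transition_cover(MODEL, "logged_out"):
        print(" -> ".join(actions), "| expect:", expected)
```

Each generated case pairs an action sequence with the state the model predicts. A test harness would replay the actions against the real application and compare the resulting state with that prediction, so the model acts as both test generator and oracle.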

Each of these Software Testing strategies offers distinct advantages and can be chosen based on the specific requirements, complexity, and context of the software project. The key is to understand the nuances of each strategy and apply them judiciously to enhance the quality, efficiency, and effectiveness of the software testing process.

Shift-Left Testing: Integrating Quality Early

Shift-left testing is a foundational concept in modern test strategies, transforming how quality assurance is approached within software development. Rather than waiting for development to finish before beginning tests, the shift-left methodology brings testing activities forward, embedding them from the earliest stages of the development lifecycle.

This proactive approach encourages close collaboration between testers and developers from the outset. By testing requirements, designs, and code as soon as they are available, teams can catch defects earlier, when they are typically easier and less expensive to resolve.

Adopting a shift-left strategy means:

- Detecting issues sooner, which minimizes costly rework down the line.

- Empowering teams to identify ambiguities in requirements or design before code is even written.

- Enabling a culture of continuous feedback and improvement.

Ultimately, shift-left testing aligns with agile and DevOps practices, promoting faster releases without sacrificing quality. It is an essential test strategy for teams aiming to deliver robust, reliable software at pace—helping to create resilient products while optimizing both time and resources.

Static and Dynamic Testing Approaches: Understanding Their Benefits

Regarding software testing, two foundational approaches take center stage: static and dynamic testing. Each offers its own unique strengths, contributing essential value to the quality assurance process.

Without executing the program, static testing evaluates the software’s artifacts, such as code, requirements, and design documents. Think of it as inspecting the blueprints before building a house. This method helps teams detect errors, ambiguities, and inconsistencies early on, significantly reducing the cost and effort of correcting defects later in the development cycle. Peer reviews, walkthroughs, and static code analysis tools like SonarQube or Coverity exemplify this approach, enabling organizations to catch potential pitfalls before they cause real-world issues.
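The defining property of static testing, inspecting code without running it, can be demonstrated with Python's standard `ast` module. The sketch below flags calls to `eval` and `exec` in a source string; the rule set and the sample code are illustrative only, and tools such as SonarQube or Coverity apply far larger and more sophisticated rule sets.

```python
import ast

# Illustrative rule set only: built-ins that execute arbitrary code
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source: str) -> list[tuple[int, str]]:
    """Parse `source` (without executing it) and report (line, name) for risky calls."""
    tree = ast.parse(source)
    findings = []
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


SAMPLE = '''
def load_config(text):
    return eval(text)  # would run arbitrary code if executed

def shutdown():
    raise SystemExit("never triggered: the analysis only parses this code")
'''

if __name__ == "__main__":
    print(find_risky_calls(SAMPLE))
```

Calling `find_risky_calls(SAMPLE)` reports the `eval` call on line 3 of the sample, and the `raise SystemExit` in `shutdown` never fires, because the code is only parsed, never executed.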

Dynamic testing, by contrast, brings the software to life—executing code and observing actual behavior under various conditions. This approach is vital for verifying that the application functions as intended and meets user expectations. From automated unit tests with frameworks like JUnit to comprehensive system and user acceptance testing, dynamic testing uncovers bugs and usability issues that static methods may miss, ensuring the software performs reliably in real-world scenarios.

By integrating both static and dynamic testing approaches, organizations create a robust safety net—catching defects early, minimizing rework, and ultimately delivering higher quality, more dependable software to users.

What is the Difference Between Static and Dynamic Test Strategies?

When discussing test strategies, it’s important to distinguish between static and dynamic approaches, as each plays a vital role in the overall quality assurance process.

Static test strategies involve examining code, requirements, or design documents without actually executing the program. Think of this as a proactive approach—reviews, walkthroughs, and inspections are conducted to identify potential flaws early, much like proofreading a manuscript before it goes to print. This can save valuable time and resources by catching issues before a single line of code runs on a machine.

On the other hand, dynamic test strategies require running the software to observe its behavior in real-time scenarios. Testers execute the program, interact with various features, and evaluate the system's responses. It's similar to test-driving a new car: you want to know not only what the manual says but also how the car performs on the road.

In summary:

- Static strategies help spot defects and inconsistencies at an early stage, often preventing costly fixes down the line.

- Dynamic strategies ensure the software functions as expected when tested in real-world conditions.

Both methods are essential to a practical test strategy, working together to help teams build robust and reliable applications.

Parallel Test Execution and AI-Powered Enhancements

Modern testing platforms significantly improve efficiency through parallel test execution and the integration of AI-driven features. When tests are executed in parallel across multiple browsers, devices, and operating systems, teams can rapidly validate software behavior in various real-world environments. This not only reduces overall testing time but also ensures broader coverage without the bottleneck of sequential test runs.
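The benefit of parallel execution can be sketched with Python's standard `concurrent.futures` module. Here `run_suite` is a hypothetical placeholder that merely sleeps to simulate waiting on a remote browser; in a real setup it would start a session on something like a Selenium Grid node or a cloud device farm. Threads are a reasonable fit because the work is I/O-bound.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical environment matrix: (browser, operating system)
ENVIRONMENTS = [
    ("chrome", "windows"), ("firefox", "windows"), ("edge", "windows"),
    ("chrome", "macos"), ("safari", "macos"), ("firefox", "linux"),
]


def run_suite(environment: tuple[str, str]) -> tuple[tuple[str, str], bool]:
    """Hypothetical stand-in for running the suite on one remote environment."""
    time.sleep(0.1)  # simulate I/O-bound waiting on a remote browser session
    return environment, True


def run_matrix(environments, max_workers: int = 3) -> dict[tuple[str, str], bool]:
    """Run the suite on every environment concurrently and collect pass/fail."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in input order, even if runs finish out of order
        return dict(pool.map(run_suite, environments))


if __name__ == "__main__":
    start = time.perf_counter()
    results = run_matrix(ENVIRONMENTS)
    elapsed = time.perf_counter() - start
    print(f"{sum(results.values())}/{len(results)} environments passed in {elapsed:.2f}s")
```

With three workers, the six simulated runs complete in roughly two rounds instead of six, which is the kind of saving parallel execution aims for at larger scale.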

AI-powered capabilities, such as intelligent wait mechanisms, self-healing locators, automated scheduling, and robust test maintenance, further streamline the process. Features like Smart Wait dynamically handle timing issues by synchronizing test actions with application loading times, minimizing flaky test results. Meanwhile, self-healing locators automatically adjust to application user interface changes, reducing manual intervention and maintenance overhead.
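Commercial features such as Smart Wait are proprietary, so their internals are not shown here. The underlying idea of synchronizing with the application instead of sleeping for a fixed time can, however, be sketched as a generic polling wait. The `wait_until` helper and the `FakePage` class below are hypothetical; Selenium's `WebDriverWait` follows the same poll-until-condition-or-timeout pattern.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0,
               poll_interval: float = 0.25) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass.

    Returns the truthy value so callers can use it (e.g. a located element).
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


class FakePage:
    """Hypothetical page whose submit button only appears after a delay."""

    def __init__(self, ready_after: float) -> None:
        self._ready_at = time.monotonic() + ready_after

    def find_submit_button(self) -> Optional[str]:
        return "submit-button" if time.monotonic() >= self._ready_at else None


if __name__ == "__main__":
    page = FakePage(ready_after=0.5)
    button = wait_until(page.find_submit_button, timeout=5, poll_interval=0.1)
    print("found", button)
```

Compared with a fixed `time.sleep(5)`, the polling wait returns as soon as the element is ready and fails with a clear error only when the application is genuinely too slow, which reduces both wasted time and flaky results.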

With these advancements, testers can focus on expanding coverage and identifying edge cases rather than repeatedly troubleshooting environmental inconsistencies or brittle test scripts. This cohesive approach leads to increased productivity, faster feedback cycles, and ultimately, higher confidence in release quality.

Template for Test Strategies in Software Testing

1.0 Overview

1.1 Purpose of the Document

The purpose of this document is to delineate a comprehensive framework for Test Strategies in Software Testing. It serves as a guiding beacon for the testing team, ensuring that the testing process is aligned with the overarching project goals and business objectives. This document is instrumental in laying down a structured and uniform approach to software testing.

1.1.1 Review and Approval

To maintain clarity, alignment, and quality across the project, the test strategy document should be thoroughly reviewed and formally approved by key stakeholders. This typically includes representatives from the business team, the QA Lead, and the Development Team Lead. Their combined oversight ensures the strategy is comprehensive, feasible, and tailored to the project’s needs—bridging the gap between business priorities and technical execution.

1.2 Project Introduction

This segment provides a succinct introduction to the project, outlining its objectives, key functionalities, and its pivotal role in the broader business context. Setting the stage for the testing approach and contextualizing the testing activities within the larger project framework is essential.

1.3 Document Scope

This part of the document defines its boundaries, specifying the extent and limitations of the testing strategies within. It clarifies the document’s applicability, ensuring that the testing approach is tailored and relevant to the specific needs of the project.

2.0 Scope of Testing

2.1 System Overview

This section describes the system or application under test in detail, covering both its technical and functional aspects. This overview is crucial for understanding the system’s architecture, dependencies, and the intricacies that may influence the testing approach.

2.2 Levels and Types of Testing to Be Performed

This subsection details the various levels of testing, such as unit, integration, and system testing, as well as the types of testing, like functional and regression testing. This comprehensive overview ensures a thorough and systematic approach to testing all aspects of the software.

2.3 Types of Testing – Out of Scope

This part explicitly outlines the types of testing that are not applicable or relevant to the project. It serves to focus the testing efforts by eliminating unnecessary activities, thereby optimizing resources and time.

2.4 Inclusions

This segment specifies the features or components of the application that are included in the testing scope. It ensures that all critical aspects of the software are covered, thereby mitigating the risk of missed defects.

2.5 Exclusions

The exclusions section clearly lists out the elements of the software that are not to be tested. This clarification prevents any misallocation of testing resources and ensures that the team’s focus is directed appropriately.
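Scope sections are easier to keep consistent when they are also captured in a structured form that can be checked automatically. The sketch below is one hypothetical way to do this in Python; the `ScopeDefinition` class and the sample entries are assumptions for illustration, and the check simply reports any test type or feature listed on both sides of the scope boundary.

```python
from dataclasses import dataclass


@dataclass
class ScopeDefinition:
    """Hypothetical machine-readable form of sections 2.2 to 2.5."""
    levels: list[str]
    types_in_scope: set[str]
    types_out_of_scope: set[str]
    inclusions: set[str]
    exclusions: set[str]

    def conflicts(self) -> list[str]:
        """List every item that appears on both sides of a scope boundary."""
        problems = [f"test type '{t}' is both in and out of scope"
                    for t in sorted(self.types_in_scope & self.types_out_of_scope)]
        problems += [f"feature '{f}' is both included and excluded"
                     for f in sorted(self.inclusions & self.exclusions)]
        return problems


if __name__ == "__main__":
    scope = ScopeDefinition(
        levels=["unit", "integration", "system"],
        types_in_scope={"functional", "regression"},
        types_out_of_scope={"localization"},
        inclusions={"login", "checkout"},
        exclusions={"legacy admin console", "login"},  # deliberate mistake
    )
    for problem in scope.conflicts():
        print(problem)
```

Running it prints a single conflict for `login`, which appears in both the inclusions and the exclusions.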

3.0 Testing Items

This section enumerates the specific items or components that are to be subjected to testing. It includes detailed information on their versions, configurations, and any other relevant details, ensuring a comprehensive understanding of what is to be tested.

4.0 Testing Strategy

4.1 Testing Mode

Defines the mode of testing to be employed, whether manual, automated, or a hybrid approach. This decision is crucial as it influences the resource allocation, tool selection, and overall testing process.

4.1.1 Evaluating Return on Investment (ROI) for Test Automation

Assessing the return on investment (ROI) for test automation is a pivotal step when determining the most effective testing mode for your project. A robust ROI analysis enables informed decisions about investing time and resources into automation versus relying on manual testing approaches.

To evaluate ROI for test automation, consider the following key factors:

- Initial Investment: Calculate the upfront costs, including licensing for commercial tools (e.g., TestRail), framework development on top of open-source tools such as Selenium, hardware, and staff training.

- Ongoing Maintenance: Estimate the effort and expenses involved in maintaining test scripts, updating frameworks, and managing integrations as your system evolves.

- Test Execution Time: Automated suites typically execute faster than manual testing, reducing test cycle duration, especially during regression cycles.

- Frequency of Test Reuse: The more often you can reuse automated tests across builds and releases, the greater the cumulative savings.

- Defect Detection Rates: Automated tests that run on every build can surface regressions earlier, reducing the cost and effort of fixing issues later in the lifecycle.

A typical approach involves comparing the cost of running manual tests over multiple cycles with the total cost of implementing and maintaining automated tests. ROI is greater when automated suites:

- Significantly reduce manual effort,

- Increase test coverage,

- Shorten release cycles, and

- Improve software quality through more consistent and repeatable testing.
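The comparison described above can be sketched in a few lines of Python. The sketch assumes all costs are expressed in one unit (for example, person-hours) and that per-cycle costs stay constant, and it uses the common definition ROI = (cumulative savings - initial investment) / initial investment. The class, the function names, and the figures are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class AutomationCosts:
    # All figures in one unit, e.g. person-hours (an assumption of this sketch)
    initial_investment: float       # tooling, framework build, training
    maintenance_per_cycle: float    # keeping scripts in step with the product
    automated_run_per_cycle: float  # executing the suite and triaging failures
    manual_run_per_cycle: float     # executing the same checks by hand


def savings_per_cycle(c: AutomationCosts) -> float:
    return c.manual_run_per_cycle - (c.automated_run_per_cycle + c.maintenance_per_cycle)


def roi(c: AutomationCosts, cycles: int) -> float:
    """ROI = (cumulative savings - initial investment) / initial investment."""
    net_gain = savings_per_cycle(c) * cycles - c.initial_investment
    return net_gain / c.initial_investment


def break_even_cycles(c: AutomationCosts) -> Optional[int]:
    """Smallest number of cycles after which automation has paid for itself."""
    per_cycle = savings_per_cycle(c)
    if per_cycle <= 0:
        return None  # automation never pays off under these assumptions
    return math.ceil(c.initial_investment / per_cycle)


if __name__ == "__main__":
    costs = AutomationCosts(initial_investment=200, maintenance_per_cycle=6,
                            automated_run_per_cycle=4, manual_run_per_cycle=40)
    print("break-even after", break_even_cycles(costs), "cycles")  # 7
    print(f"ROI after 20 cycles: {roi(costs, 20):.0%}")            # 200%
```

With these hypothetical figures each cycle saves 30 hours, so automation breaks even after 7 cycles and reaches an ROI of 200% after 20. If maintenance grows to the point where per-cycle savings are zero or negative, `break_even_cycles` returns `None`, which is a useful signal that manual testing may be the better choice for that suite.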

Ultimately, a well-justified investment in test automation yields long-term benefits. By systematically weighing costs against time savings, defect reduction, and scalability, organizations can clearly demonstrate the tangible value automation brings to the software development process.

4.2 Execution Strategy

This part outlines the strategy for executing the tests. It covers the sequence in which test cases will be executed, the prioritization of testing activities, and how the tests will be rolled out across different stages of the project.

4.3 Testing Methodology – Lifecycle

4.3.1 Test Entry and Exit Criteria

Specifies the criteria that signify the beginning and end of the testing phases. Entry criteria could include test environment readiness, whereas exit criteria might encompass achieving a certain percentage of passed test cases.
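Entry and exit criteria are most useful when they are objective enough to be checked mechanically. The sketch below encodes one hypothetical set: entry requires a ready environment and a passing smoke test, while exit requires a pass rate of at least 95% and no open critical defects. Both thresholds are assumptions; each project sets its own.

```python
def entry_criteria_met(environment_ready: bool, smoke_tests_passed: bool) -> bool:
    """Testing may start only when the environment is up and the build is testable."""
    return environment_ready and smoke_tests_passed


def exit_criteria_met(executed: int, passed: int, open_critical_defects: int,
                      min_pass_rate: float = 0.95) -> tuple[bool, list[str]]:
    """Return (met, reasons); the default threshold is illustrative only."""
    reasons = []
    pass_rate = passed / executed if executed else 0.0
    if pass_rate < min_pass_rate:
        reasons.append(f"pass rate {pass_rate:.1%} is below {min_pass_rate:.0%}")
    if open_critical_defects > 0:
        reasons.append(f"{open_critical_defects} critical defect(s) still open")
    return not reasons, reasons


if __name__ == "__main__":
    print(entry_criteria_met(environment_ready=True, smoke_tests_passed=True))
    met, reasons = exit_criteria_met(executed=200, passed=188, open_critical_defects=1)
    print(met, reasons)
```

Returning the reasons alongside the verdict makes the result directly usable in a status report, rather than a bare "not ready".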

4.3.2 Testing Deliverables

Lists all the deliverables associated with the testing process, such as test plans, test cases, bug reports, and final test summaries. This ensures a tangible output from the testing activities.

4.4 Reference Materials and External Guidelines

A robust test strategy draws on various reference materials and industry guidelines to ensure best practices are followed throughout the testing process. Useful materials typically include:

- Internal Documentation: Detailed product and API documentation is the primary source for understanding system architecture, expected behaviors, and integration points.

- Testing Tool Manuals: Comprehensive guides for tools such as Selenium or JMeter provide valuable insights for configuration, scripting, and optimizing automated and performance testing workflows.

- Industry Standards: Adhering to established external frameworks, such as the OWASP guidelines for security testing, helps ensure that test efforts meet recognized quality and security benchmarks.

By leveraging internal resources and authoritative external guidelines, the testing team is better equipped to design thorough and effective test cases, ultimately enhancing product reliability and compliance.

4.5 Testing Limitations and Risks

Identifies potential limitations and risks in the testing process, along with strategies for their mitigation. This proactive approach is essential for effectively managing unforeseen challenges.

4.6 Resource Requirement, Allocation & Training Needs

Details the resources required for testing, including human resources, technology, infrastructure, and any training needs. This ensures that the testing team is adequately equipped to conduct the testing activities efficiently.

4.7 Assumptions, Dependencies, and Constraints

Outlines any assumptions, dependencies, and constraints impacting the testing process. This section is critical for setting realistic expectations and planning for contingencies.

5.0 Test Environment, Tools, and Data

5.1 Hardware Environment

Specifies the hardware requirements for establishing the test environment, ensuring that the testing infrastructure is robust and capable of handling the software application.

5.2 Software Environment

Details the software, operating systems, databases, and other tools that form the software environment for testing. This environment should closely simulate production so that test results reflect real-world behavior.

5.3 Network and Communication Environment

Describes the network setup and communication infrastructure required for the test environment, ensuring seamless connectivity and interaction during the testing process.

5.4 Tools

Lists the tools and technologies that will be utilized for various purposes like test management, automation, defect tracking, etc., thereby equipping the testing team with the necessary tools to execute tests effectively.

5.5 Test Data

Outlines the approach for managing test data, including its creation, maintenance, and protection, ensuring the availability of high-quality and relevant data for effective testing.
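One common test-data protection technique is pseudonymization: replacing personal data with realistic but fake values before the data reaches a test environment. The sketch below uses Python's standard `hashlib` to derive a stable pseudonym for each email address, so records referring to the same customer stay linked. The field names and the salt handling are assumptions; hashing is not full anonymization, and the salt must be kept secret to resist dictionary attacks.

```python
import hashlib


def mask_email(email: str, salt: str) -> str:
    """Map an email to a stable pseudonym: same email and salt give the same result."""
    digest = hashlib.sha256((salt + email.strip().lower()).encode("utf-8")).hexdigest()
    # .test is a reserved top-level domain, so the address will not resolve publicly
    return f"user_{digest[:12]}@example.test"


def mask_records(records: list[dict], salt: str) -> list[dict]:
    """Return masked copies of customer records; the originals are left untouched."""
    masked = []
    for record in records:
        copy = dict(record)
        copy["email"] = mask_email(record["email"], salt)
        copy["name"] = "Test User"
        masked.append(copy)
    return masked


if __name__ == "__main__":
    production_like = [
        {"id": 1, "name": "Alice Example", "email": "alice@example.com"},
        {"id": 2, "name": "Bob Example", "email": "bob@example.com"},
    ]
    for row in mask_records(production_like, salt="load-from-a-secret-store"):
        print(row)
```

Deterministic masking keeps joins and lookups working across tables, which purely random replacement values would break.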

6.0 Defect Management

6.1 Defect Life Cycle Process

Describes the process that a defect follows from its identification to closure. This process is crucial for tracking and managing defects effectively throughout the testing cycle.
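A defect life cycle is essentially a state machine, so it can be enforced in code. The states and allowed transitions below are one common variant (new, assigned, fixed, retest, then closed or reopened, plus rejected and deferred). Defect trackers and teams define their own, so treat this mapping and the `Defect` class as hypothetical rather than a standard.

```python
from enum import Enum


class DefectState(Enum):
    NEW = "new"
    ASSIGNED = "assigned"
    FIXED = "fixed"
    RETEST = "retest"
    REOPENED = "reopened"
    CLOSED = "closed"
    REJECTED = "rejected"
    DEFERRED = "deferred"


# One common lifecycle variant: state -> states it may move to next
ALLOWED = {
    DefectState.NEW: {DefectState.ASSIGNED, DefectState.REJECTED, DefectState.DEFERRED},
    DefectState.ASSIGNED: {DefectState.FIXED, DefectState.REJECTED, DefectState.DEFERRED},
    DefectState.FIXED: {DefectState.RETEST},
    DefectState.RETEST: {DefectState.CLOSED, DefectState.REOPENED},
    DefectState.REOPENED: {DefectState.ASSIGNED},
    DefectState.DEFERRED: {DefectState.ASSIGNED},
    DefectState.REJECTED: set(),  # terminal
    DefectState.CLOSED: set(),    # terminal
}


class Defect:
    def __init__(self, defect_id: str) -> None:
        self.defect_id = defect_id
        self.state = DefectState.NEW
        self.history = [DefectState.NEW]  # audit trail of every state visited

    def move_to(self, new_state: DefectState) -> None:
        """Apply a transition, refusing any move the lifecycle does not allow."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(
                f"{self.defect_id}: cannot move from {self.state.value} to {new_state.value}"
            )
        self.state = new_state
        self.history.append(new_state)
```

For example, a defect that fails retest goes `RETEST -> REOPENED -> ASSIGNED` and back through the fix cycle, while an attempt to jump straight from `NEW` to `CLOSED` raises an error instead of silently skipping verification.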

6.2 Severity Levels

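Defines the severity levels for defects, which classify each defect by its impact on the system (for example, critical, major, minor, or trivial). Severity informs, but does not by itself determine, the order in which defects are fixed.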

6.3 Defect Priority

Specifies the criteria for assigning priority levels to defects, which reflect how urgently each defect must be fixed from a business perspective, facilitating efficient defect management and resolution.
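The distinction between severity (impact) and priority (urgency) matters when deciding the order of fixes. The sketch below uses hypothetical scales and orders defects by priority first, using severity only as a tie-breaker. It includes the classic example of a misspelled company name on the home page, which is trivial in severity yet urgent to fix.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Impact on the system; lower value = more severe (illustrative scale)."""
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3
    TRIVIAL = 4


class Priority(IntEnum):
    """Urgency of the fix; lower value = more urgent (illustrative scale)."""
    P1 = 1
    P2 = 2
    P3 = 3


@dataclass
class Defect:
    defect_id: str
    summary: str
    severity: Severity
    priority: Priority


def triage_order(defects: list[Defect]) -> list[Defect]:
    """Order fixes by priority first, then by severity as a tie-breaker."""
    return sorted(defects, key=lambda d: (d.priority, d.severity))


if __name__ == "__main__":
    backlog = [
        Defect("BUG-1", "crash in rarely used admin export", Severity.CRITICAL, Priority.P3),
        Defect("BUG-2", "company name misspelled on home page", Severity.TRIVIAL, Priority.P1),
        Defect("BUG-3", "checkout total ignores discount", Severity.MAJOR, Priority.P1),
    ]
    for d in triage_order(backlog):
        print(d.defect_id, d.priority.name, d.severity.name, d.summary)
```

Here BUG-3 and BUG-2 are both P1, so severity breaks the tie in favor of the checkout bug, and the critical but low-priority crash is scheduled last.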

7.0 Test Control

7.1 Test Suspension Criteria

Outlines the conditions under which testing activities may be temporarily suspended, ensuring that testing is halted only under predefined circumstances.

7.2 Test Resumption Criteria

Specifies the conditions that must be met to resume testing after a suspension, ensuring a structured approach to restarting testing activities.

8.0 Communication

8.1 Status Reporting and Frequency

Defines the approach for reporting the status of testing activities, including the frequency and format of the reports, ensuring that all stakeholders are kept informed about the testing progress.

8.2 Metrics to be Captured

Lists the key metrics and performance indicators to be captured during the testing process, providing a quantitative measure of the testing effectiveness and efficiency.
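Several widely used test metrics reduce to simple ratios. The helpers below compute pass rate, defect density (defects per thousand lines of code), and defect removal efficiency (the share of all known defects caught before release). The function names and sample figures are hypothetical, and each project should define exactly what counts as a defect or a line of code before comparing these numbers over time.

```python
def pass_rate(passed: int, executed: int) -> float:
    """Fraction of executed test cases that passed."""
    return passed / executed if executed else 0.0


def defect_density(defects: int, kloc: float) -> float:
    """Defects per thousand lines of code (KLOC)."""
    return defects / kloc


def defect_removal_efficiency(found_before_release: int, found_after_release: int) -> float:
    """Share of all known defects that were caught before release."""
    total = found_before_release + found_after_release
    return found_before_release / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical figures for one release
    print(f"pass rate:      {pass_rate(184, 200):.0%}")                  # 92%
    print(f"defect density: {defect_density(45, 30):.1f} per KLOC")      # 1.5
    print(f"DRE:            {defect_removal_efficiency(45, 5):.0%}")     # 90%
```

Tracking these values per release, rather than as one-off numbers, is what turns them into useful indicators of testing effectiveness.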

The Test Strategy document is a pivotal element in software testing. It ensures that testing activities are conducted in a structured, efficient, and goal-oriented manner, and it serves as a comprehensive guide to every aspect of the testing process, from planning to execution, thereby supporting the delivery of high-quality software.

In conclusion, a well-crafted Test Strategy in Software Testing is an indispensable component of the software development lifecycle, acting as a guiding light for quality assurance teams. It streamlines the testing process and ensures that every action taken aligns with broader project goals and business objectives. A Test Strategy minimizes uncertainties and maximizes efficiency by addressing key aspects such as the scope of testing, methodologies employed, resource allocation, and risk management.

As software systems continue to become more complex, the role of a comprehensive Test Strategy becomes increasingly vital. It is the foundation upon which robust, reliable, and high-performing software systems are built, ensuring they meet user expectations and thrive in today’s competitive digital landscape.
